Cognitive science can’t settle questions of right and wrong. But it can set the table for theories of rational decision-making and ethics. And the one thing we know for sure about how we think is that, at the most fundamental level, our brains are connection systems that are changed by experience. This, unlike theories of consciousness, is not really up for debate. Transformational experiences and self-altering decisions exist because of the way we think. Our minds change. They change in the shallow sense of changing what we remember, the facts we know or the beliefs we hold…but they also change in a much deeper sense. Experience changes, in fundamental ways, how we think and who we are.

In this second part of a series on cognitive science and its implications for decision-making and ethics, TW2BR focuses on the central role of prediction in how we think. This role starts with a problem right at the heart of the idea that brains are connection systems that require training. In computer science applications, neural networks are trained with data that includes a “label” – the correct answer. A picture of a dog includes a label, “dog”. If the picture doesn’t contain a dog, the label is “no dog”. The connection system trains itself by adjusting its internal connections to create outputs more likely to match the labels it’s given. This works fine in the computer lab, but as we all know, the world does not come equipped with labels!
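To make the labeled-training idea concrete, here is a minimal sketch of a single artificial "connection" unit learning from labeled examples. Everything in it is an assumption made for illustration: the two made-up features per picture, the toy data, and the learning rate. It is not a description of any real image classifier, just the bare loop of "guess, compare to the label, adjust the connections".

```python
import math
import random


def train_labeled(examples, epochs=200, lr=0.5, seed=0):
    """Train one logistic unit on (features, label) pairs."""
    rng = random.Random(seed)
    n_features = len(examples[0][0])
    weights = [rng.uniform(-0.1, 0.1) for _ in range(n_features)]
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            z = bias + sum(w * x for w, x in zip(weights, features))
            output = 1.0 / (1.0 + math.exp(-z))   # the unit's guess, between 0 and 1
            error = label - output                # how far the guess is from the label
            # Nudge each connection in the direction that shrinks the error.
            weights = [w + lr * error * x for w, x in zip(weights, features)]
            bias += lr * error
    return weights, bias


def predict(weights, bias, features):
    """Return 1 ("dog") or 0 ("no dog") for one example."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if z > 0 else 0


# Hypothetical toy data: two invented features per picture, label 1 = "dog", 0 = "no dog".
EXAMPLES = [
    ([1.0, 0.9], 1),
    ([0.9, 0.8], 1),
    ([0.8, 1.0], 1),
    ([0.1, 0.2], 0),
    ([0.2, 0.0], 0),
    ([0.0, 0.1], 0),
]

weights, bias = train_labeled(EXAMPLES)
predictions = [predict(weights, bias, features) for features, _ in EXAMPLES]
```

Notice that the loop cannot run at all without the `label` in each pair. That dependence is exactly the problem the rest of this piece is about.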

The power of connection architectures has been demonstrated in countless real-world problems based on this kind of training process. But stopping here invites a deep misunderstanding: the picture of the brain as a kind of passive sponge that absorbs sense data and then classifies and reflects on it after being handed the “true” answers.

This is another place where introspection is deeply misleading. We tend to self-reflect on cognition as a series of discrete steps: perception, understanding, decision and then action. We even see these four stages as owned by fundamentally different realms. Perception is the domain of tastebuds and touches, of eyeballs and ears. Understanding is the job of the brain. Decision is a matter of will. And action is a thing of the body. As if our eyeballs went on seeing without the brain, the body went on acting (randomly perhaps?) on its own, or the will was a little homunculus living in the penthouse suite of the brain. Not only are all these functions part and parcel of cognition in the brain, they are entwined and executed differently than the linear multi-step process we imagine.

Modern neuroscience understands the brain as an active prediction machine. This holds true at every level of the brain all the way down to the basic levels of sense perception.

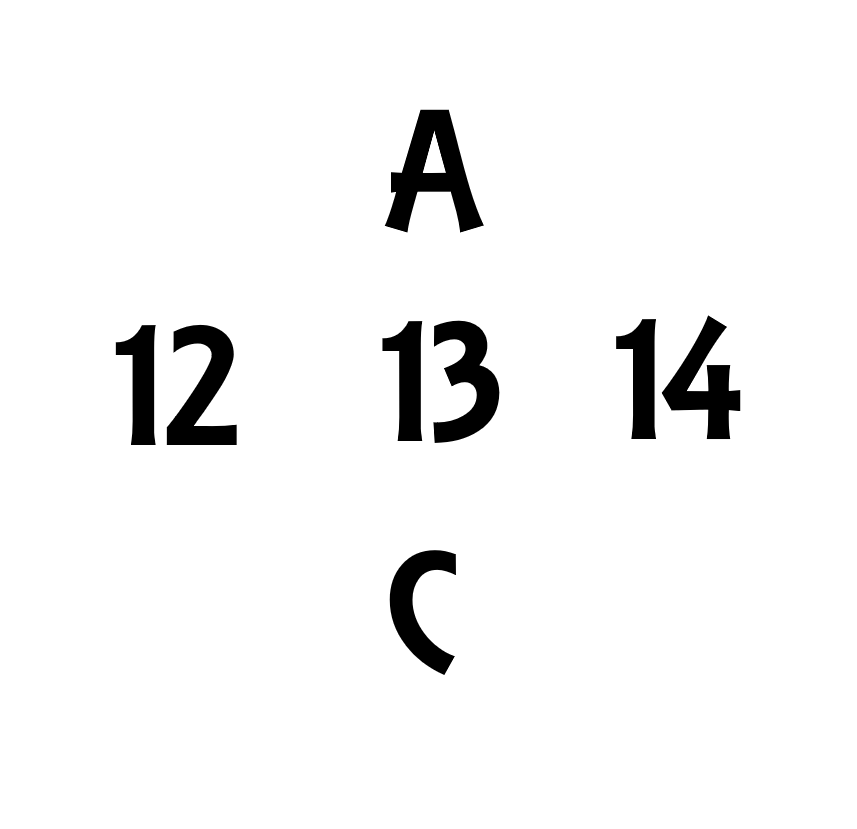

Take a second to read the diagram above top-to-bottom.[1]

Now read it again left to right.

Then take a moment to reflect on the middle letter/number. If you’re like me (and most people), when you read top-to-bottom you saw that middle character as a B. A-B-C. When you read left-to-right, you saw it as a 13. 12-13-14.

In each case, the black marks on the page are identical. But you effortlessly and unconsciously read the middle character based on its role in a sequence.

What’s going on here?

This little puzzle is a remarkable example of the way the brain functions and it’s the secret to explaining how we learn without a world labeled for our convenience. We are not seeing what is on the page, we’re seeing what’s on the page measured against what we expect to be there. When the brain sees a puzzle beginning with an A and ending in a C it will very naturally shape what is in-between into a B. A top-down prediction (B likely follows A) is meeting bottom-up data (lines that look kind of like a B). Yes, that thing in the middle must be B-like for the prediction to surface. Put an X in the middle, and the illusion will go away. This is lucky. What we see isn’t invented by our minds. It’s interpreted by our minds. There’s a difference.
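One way to picture a top-down prediction meeting bottom-up data is as a simple weighing of expectation against evidence. The sketch below uses Bayes' rule for that weighing, which is my assumption for illustration rather than something the diagram proves. All the numbers are made up, and the names (`interpret`, `AFTER_A`, `AMBIGUOUS_MARKS`, and so on) are hypothetical.

```python
def interpret(context_prior, shape_likelihood):
    """Weigh a top-down expectation against bottom-up evidence (Bayes' rule)."""
    unnormalized = {
        symbol: context_prior.get(symbol, 0.0) * shape_likelihood[symbol]
        for symbol in shape_likelihood
    }
    total = sum(unnormalized.values())
    return {symbol: value / total for symbol, value in unnormalized.items()}


# Made-up evidence: the ambiguous marks fit "B" and "13" equally well, "X" hardly at all.
AMBIGUOUS_MARKS = {"B": 0.45, "13": 0.45, "X": 0.01}

# Made-up expectations set by the surrounding sequence.
AFTER_A = {"B": 0.90, "13": 0.05, "X": 0.05}    # reading A, ?, C
AFTER_12 = {"B": 0.05, "13": 0.90, "X": 0.05}   # reading 12, ?, 14

letters_reading = interpret(AFTER_A, AMBIGUOUS_MARKS)
numbers_reading = interpret(AFTER_12, AMBIGUOUS_MARKS)
best_in_letters = max(letters_reading, key=letters_reading.get)
best_in_numbers = max(numbers_reading, key=numbers_reading.get)

# Marks that plainly look like an X: the context can no longer pull them toward "B".
CLEAR_X = {"B": 0.01, "13": 0.01, "X": 0.98}
x_reading = interpret(AFTER_A, CLEAR_X)
best_for_x = max(x_reading, key=x_reading.get)
```

With these invented numbers, the same marks come out as "B" in the letter context and "13" in the number context, while a clear X stays an X whatever the context expects. The expectation tips the balance only when the evidence leaves room for it.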

Here’s how Andy Clark puts it: “…just about every aspect of the passive forward-flowing model is false. We are not cognitive couch potatoes idly awaiting the next input, so much as proactive predictavores – nature’s own guessing machines forever trying to stay one step ahead by surfing the incoming waves of sensory stimulation.”

Here’s where the connectionist architecture of the brain and top-down prediction come together to solve the training data problem. The brain isn’t one single big connection system. It’s a whole array of semi-modularized connection systems in which the output of one system becomes the input to the connection system above it.

This architecture lets the brain do something extraordinary. By arranging those modular connection systems into a hierarchy, it can take the output of one system and then feed it into the one above it. Now suppose that system one level up is predicting what the system below it will generate next. Each moment becomes training data – with the label (the right answer) attached in the next observation from the lower-level system. In this kind of architecture, predictions will reach all the way down to the sense data coming in at the most basic level. When that happens, not only is every moment a learning opportunity (with the label to follow in the next), but the world becomes the ultimate source of truth.
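Here is a rough sketch of that idea with just two levels. The lower level is a hypothetical stand-in (a simple wave), and the upper level is a tiny linear predictor with two adjustable connections. Both are assumptions chosen to keep the example small. The point to notice is that nobody supplies a label: the "right answer" for each prediction is simply whatever the lower level produces at the next step.

```python
import math


def lower_level_output(t):
    """Hypothetical stand-in for what a lower-level system outputs at step t."""
    return math.sin(t)


def run_predictor(steps=2000, lr=0.1):
    """Upper level learns, online, to predict the lower level's next output."""
    weights = [0.0, 0.0]  # connection strengths, adjusted only from prediction error
    previous, current = lower_level_output(0), lower_level_output(1)
    errors = []
    for t in range(2, steps):
        prediction = weights[0] * current + weights[1] * previous
        observed = lower_level_output(t)   # the "label" arrives one step later
        error = observed - prediction
        # Adjust the connections to make the same mistake smaller next time.
        weights[0] += lr * error * current
        weights[1] += lr * error * previous
        errors.append(abs(error))
        previous, current = current, observed
    return weights, errors


weights, errors = run_predictor()
early_error = sum(errors[:50]) / 50
late_error = sum(errors[-50:]) / 50
print(f"mean error, first 50 steps: {early_error:.4f}")
print(f"mean error, last 50 steps:  {late_error:.6f}")
```

Compared with ordinary labeled training, the only change is where the target comes from. It is read off the next observation instead of being handed over by a teacher, which is why every moment can serve as training data.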

This multi-level prediction is the brain’s way of generating training data. By predicting the output of its own systems, the brain’s many connection systems can learn by using the systems beneath them. Modern neuroscience pictures the brain as a voracious hierarchical (or networked) prediction machine in a constant, never-ending whirl of interconnected processing. This is profound, elegant, beautiful, and astonishing. The brain is its own guide, its own teacher, and its own source of truth. It just needs a world to give it experience.

Building top-down models isn’t just a clever way to create training data. Prediction turns out to be a dramatically better process for survival than contemplation. It’s easy to forget that from an evolutionary perspective, understanding has value only in the context of enabling better action. So, we can be pretty sure that the point of all the prediction going on in our brains isn’t better understanding, it’s better action. The understanding that results from prediction is a by-product of making good decisions.

Given that, why would our brains evolve as prediction machines – why is prediction better than reflection?

Animals with very basic neural structures use their brains for straightforward responses to key stimuli in the environment. Avoiding danger. Finding food. These response engines process simple stimuli and return straightforward action paths. But it turns out that more complex decision-making requires more than simple stimulus-response engines can provide. To react quickly to danger or opportunities, you need to be able to key in on important facts. Notice deviations. And respond. FAST!

And here’s where prediction systems shine. Because while building the models necessary to make predictions is a LOT of cognitive load, it’s worth it because predicting what will happen is better than reacting to what has happened. A good prediction system will outperform a good response system when it comes to effective action.

A chess player who only reacts to an opponent’s move will rarely beat a chess player who strategizes three or four plies ahead. Natural selection makes survival a kind of high-stakes chess game, and effective action is what natural selection will tend to favor. So regardless of whether you are Magnus Carlsen or hopeless even at checkers, your brain is a master at the most important skill necessary to play the game of life: prediction.

[1] This example and much of the core content here come from Surfing Uncertainty by Andy Clark.